AWS Lambda, but more importantly the serverless ecosystem, is changing the way we can create and think our softwares on the cloud.

With serverless we can really focus on coding our service more than worrying about the infrastructure. Serverless is not useful in any occasion but there is a wide range of situation where using it becomes handy and very powerful.

For instance, let’s think for a moment our automation build pipeline: what would you say if I can tell you that you won’t need to tight them with a specific tool like Jenkins or Bamboo but you should use Jenkins or similar as luncher running multiple Lambda functions, in parallel or in sequence, levering the power of the cloud?

I can give you a concrete example, yesterday night I was doing a spike in order to generate a Javascript bundle on the cloud with Webpack.

Therefore I invested some time creating an AWS Lambda that executes Webpack for bundling a simple example that contains lodash and some ES6 code like this one:

import _ from ‘lodash’;

function component () {

var element = document.createElement(‘div’);

element.innerHTML = _.join([‘Hello’,’webpack’], ‘ ‘);

return element;

}

document.body.appendChild(component());

This is an example that you can find in the webpack official website, I used that just for the sake of the demo.

What we want to do now is the possibility to bundle this ES6 code and its library to a unique Javascript file that could be used inside our hypothetic application or website, mimicking what a step of a build pipeline would do.

Obviously you could run any other executables inside AWS Lambda, I choose Webpack because was the one used in my working place.

AWS Lambda at the rescue

If you create an automation pipeline on the cloud and maybe you don’t have many devops in your team or company, you should spend some time learning AWS Lambda, it could help out in these kind of activities.

What is AWS Lambda? Long story short: it’s a stateless docker container that is maintained by AWS where you can focus on writing the business logic of your activity more than thinking on the infrastructure.

Too good for being true? Yeah, you are right, Lambda has some limitations:

More information regarding the limits are available in the Lambda documentation website

But still the amount of things you can do with it is pretty impressive!

So, going back to our main goal, we want to bundle our Javascript project with Webpack inside Lambda, how can we achieve that?

First thing first, I created a git repository where you can find a Javascript project to simply use inside a AWS Lambda function. In this way you won’t need to create a custom project and you can focus more on the AWS side.

There are few points that I’d like to highlight in this simple project because usually are the ones that you can waste your time:

- Lambda functions can save temporary files inside the /tmp/ folder (bear in mind that you are running your code inside a docker container!).

If you try to save somewhere else you will receive an error trying executing it. - With Lambda you can run executables or node CLI tools like NPM or Webpack just uploading them inside your Lambda environment and referring to them with relative path.

- AWS Lambda runs for not more than 300 seconds therefore if you have a complex operation you could split it up in different chained Lambda functions that are triggered in sequence.

This should help you in debugging your operations too.

In the project I set up the webpack config file in this way:

var path = require('path');

module.exports = {

entry: './app/index.js',

output: {

filename: 'bundle.js',

path: path.resolve('/tmp/')

}

};

As you can see I’m saving my bundle in the tmp folder because is the only one with write permissions (remember the capacity limit of 512MB for that folder).

Then I created an index.js file where I describe my Lambda function:

var spawn = require('child_process').spawn;

var fs = require('fs');

exports.handler = (event, context, callback) => {

var wp = spawn('./node_modules/.bin/webpack', ['--config', 'webpack.config.js']);

wp.stdout.on('data', function(data){

console.log('stdout: ' + data);

});

wp.stderr.on('data', function(err){

context.fail("writeFile failed: " + err);

});

wp.on('close', (code) => {

fs.readFile('/tmp/bundle.js', 'utf8', function (err, data) {

if (err) context.fail("read file failed: " + err);

context.succeed(data);

});

});

};

Very simple code here, I’m using Node, as you can see, but potentially you could use Python or Java (these 3 languages are supported by AWS Lambda at the moment), up to you peeking your favourite.

I import the spawn method in order to run webpack and once it has finished I read the content inside the Javascript bundle file created by Webpack in the tmp folder and I return it via context.succeed method.

Context is an object, always available inside a Lambda function, that will allow you to interact with Lambda for retrieving some useful information or, like in this case, to define when the function succeed or failed.

Now we are ready to upload the application in an AWS Lambda function.

In order to do that you will need to zip your project files (not the parent folder but just the files) and upload then in AWS.

If you didn’t install the project dependencies after cloning the repository, you should do it before uploading it to AWS.

Inside your AWS console, after selecting Lambda service, you should be able to create a new function (as far as I know not all the regions are supporting AWS Lambda).

Choose your favorite language, in my case Node 4.3, and define the basic information like “function name”, “description” and so on.

Then instead of writing the Lambda function code inside the editor provided by AWS, open the dropdown and select Upload a ZIP file

Then setup the handler, role and advanced settings in this way

The important part will be setting up the docker container where the Lambda is going to be executed with enough memory size and with a decent timeout because we are running an executable therefore we don’t want to block the execution due to a Lambda timeout.

If for any reason, you need to increase the 300 seconds soft limit set by default, you will need to contact Amazon and ask to increase it.

Another important information to remember is when the Lambda is not active for a certain amount of time (it’s estimated to 5 mins), the container used by your code will be re-used for other Lambda functions.

Therefore when you will trigger your function again it will be recreated (cold Lambda), instead if the Lambda function runs several times in few mins (warm Lambda) we will have better performances because the container will be already available and live to execute a new activity again.

Now if you want to test your Lambda function, you will need to click the button test and you should have an output similar to this one:

If you want to test live the Lambda I created you can trigger it from this link

Where to go from here

Obviously the example described is very basic and it works mainly with the project I created, but it is useful to know also how you could expand this example:

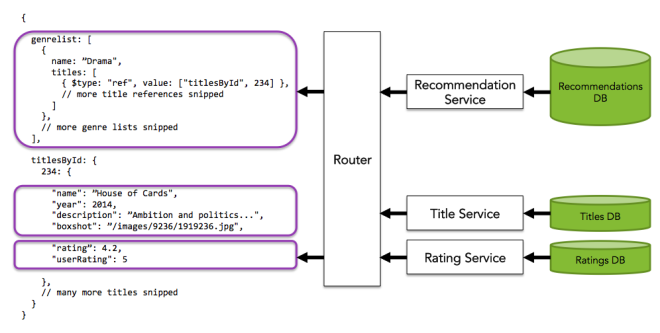

- AWS Lambda functions accept arguments passed when we trigger them, therefore potentially you could upload your project files in S3 and trigger the Lambda function directly after the upload.

In fact Lambda can be triggered by several cloud software in AWS like DynamoDB, SNS and so on; S3 is present in the list. - In order to expose the Lambda externally you will need to connect it via API Gateway, another tool provided by AWS.

In the example I shared above I configured API Gateway to trigger my Lambda function when someone is calling a specific URL. - The fastest way, and my personal recommendation, to work with AWS Lambda is via a CLI tool like Serverless Framework, you won’t need to configure manually API Gateway and your Lambda environment because Serverless Framework will provide a boilerplate to work with.

On top of that it will allow you to test your Lambda functions locally without uploading them every time on AWS.

There are many other CLI tools available but at the moment Serverless is the most complete and documented with a large community behind it. - Recently Amazon added the possibility to set environment variables for Lambda functions, therefore if your project requires them you will have the possibility to configure easily via AWS console or inside Serverless framework configuration file.

- If you don’t want to upload a CLI tool with the node_modules folder, you can create an executable with all static dependencies and upload just that file inside the ZIP file.

For Node I found a tool that works pretty well with Webpack and NPM called EncloseJS. - Remember to not abuse the power of the serverless ecosystem but understand the pros and the cons before starting using it because in some cases it’s definitely not the right choice

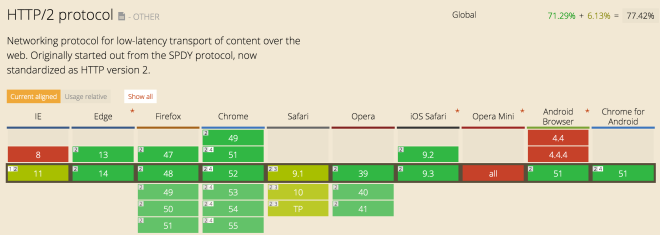

- An important consideration of the combination API Gateway + Lambda is that could work with HTTP2 protocol out of the box and you can use Cloudfront to cache your responses (also if they are dynamic) with just few configurations to set in the API Gateway console.

- With Lambda you pay just what you use, therefore if you use it for cron services, automation pipelines, triggering databse backup operations or similar, you could end up savings quite few dollars compare to an EC2 instance.